Cognitive Assessment by Telemedicine: Reliability and Agreement between Face-to-Face and Remote Videoconference-Based Cognitive Tests in Older Adults Attending a Memory Clinic

Abstract

Background

The coronavirus disease 2019 (COVID-19) pandemic has spurred the rapid adoption of telemedicine. However, the reproducibility of face-to-face (F2F) versus remote videoconference-based cognitive testing remains to be established. We assessed the reliability and agreement between F2F and remote administrations of the Abbreviated Mental Test (AMT), modified version of the Chinese Mini-Mental State Examination (mCMMSE), and Chinese Frontal Assessment Battery (CFAB) in older adults attending a memory clinic.

Methods

The participants underwent F2F followed by remote videoconference-based assessment by the same assessor within 3 weeks. Reliability was evaluated using intraclass correlation coefficients (ICC; two-way mixed, absolute agreement), the mean difference between remote and F2F-based assessments using paired-sample t-tests, and agreement using Bland-Altman plots.

Results

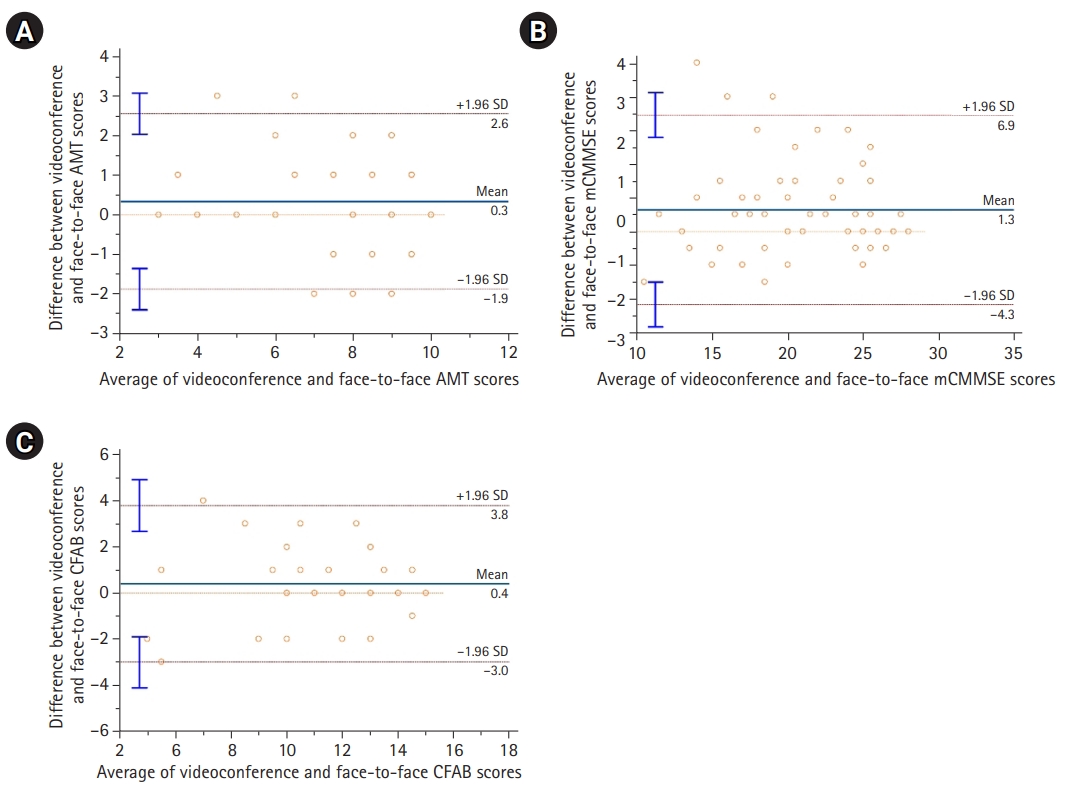

Fifty-six subjects (mean age, 76±5.4 years; 74% mild; 19% moderate dementia) completed the AMT and mCMMSE, of whom 30 completed the CFAB. Good reliability was noted based on the ICC values: AMT, ICC=0.80, 95% confidence interval [CI] 0.68–0.88; mCMMSE, ICC=0.80, 95% CI 0.63–0.88; CFAB, ICC=0.82, 95% CI 0.66–0.91. However, remote AMT and mCMMSE scores were higher than F2F scores, with mean differences (remote minus F2F) of 0.3±1.1 for AMT (p=0.03) and 1.3±2.9 for mCMMSE (p=0.001). Significant differences were observed in the orientation and recall items of the mCMMSE and the similarities and conflicting instructions items of the CFAB. Bland–Altman plots indicated wide 95% limits of agreement (AMT -1.9 to 2.6; mCMMSE -4.3 to 6.9; CFAB -3.0 to 3.8), exceeding the a priori-defined levels of error.

Conclusion

While the remote and F2F cognitive assessments demonstrated good overall reliability, the test scores were higher when performed remotely compared to F2F. The discrepancies in agreement warrant attention to patient selection and environment optimization for the successful adaptation of telemedicine for cognitive assessment.

INTRODUCTION

The coronavirus disease 2019 (COVID-19) pandemic hastened the shift to telemedicine to maintain continuity of care while mitigating the risks of exposure.1,2) However, despite the growing presence of telehealth services, limited data exist regarding the validity of telemedicine-based cognitive testing, particularly in cognitively impaired older adults.

Previous studies have described the utility of telemedicine for the diagnosis of dementia.3) Others have evaluated the reliability of the remotely administered Mini-Mental State Examination (MMSE)4) and the Montreal Cognitive Assessment (MoCA); however, these studies were predominantly conducted in younger individuals or in specific clinical conditions such as post-stroke.5) Moreover, few studies have evaluated a battery of telemedicine-based cognitive tests in the vernacular of Asian populations, particularly in older adults with cognitive impairment. In this regard, it is important to establish the reliability and agreement between telemedicine-based and face-to-face (F2F) cognitive assessments to support the validity of remote cognitive testing in older adults in preparation for future public health emergencies6) or in other settings where distance limits access to timely healthcare.

Therefore, we aimed to determine the reliability and agreement between F2F and remote videoconference-based assessments of three commonly used cognitive screening tools, namely the Abbreviated Mental Test (AMT), the modified version of the Chinese MMSE (mCMMSE), and the Chinese Frontal Assessment Battery (CFAB), among older adults with known or suspected cognitive impairment.

MATERIALS AND METHODS

Participants and Setting

We recruited 60 community-dwelling older adults presenting with known or suspected cognitive impairment using a convenience sample of patients attending a tertiary hospital memory clinic. The ethics committee of the National Healthcare Group Domain Specific Review Board reviewed and approved this study (No. 2020/00609).

Inclusion and Exclusion Criteria

We included participants aged 65 years and older who could understand English or Mandarin and could independently use WhatsApp Messenger video calls (https://whatsapp.com), or had caregivers to assist them. We excluded individuals with severe hearing or visual impairments or those with severe behavioral and psychological symptoms precluding assessment.

Data Collection

The participants completed two visits, an F2F visit followed by a remote assessment, scheduled 2–3 weeks after the F2F visit. The AMT and mCMMSE were performed by trained nurses specializing in cognition and memory disorders, followed by an assessment of CFAB by a physician running the memory clinic. For each participant, the same nurse and physician performed the F2F and remote assessments. All raters underwent standardization training before the study.

Upon consenting to the study, all participants and their caregivers (if present) were briefed on the conditions under which videoconferencing would occur. An information sheet was provided that described a standardized setting with adequate lighting; absence of visual orientation cues such as clocks, watches, or calendars; and a quiet environment.

We collected baseline demographic data (age, sex, education level, and first language). Dementia diagnosis using the Diagnostic and Statistical Manual of Mental Disorders fourth edition (DSM-IV) criteria and severity using the locally validated Clinical Dementia Rating (CDR)7) were rated by the participants’ physicians.

Cognitive Assessment

Various items on the cognitive tests were adapted for telemedicine, including clarifying the phrasing of questions and accommodating the different locations of the participants and assessors during remote assessment (Table 1). Modifications were also made to the three-stage command and the “read and obey” items of the mCMMSE to avoid participant responses outside of camera view. For the CFAB, the final item, “environmental autonomy,” was omitted because it necessitates physical contact between the assessor and the participant. This omission was also supported from a psychometric standpoint, as this item loaded poorly and its removal improved the internal consistency of the FAB.8) Thus, the final scores for both the F2F and remote CFAB excluded this item.

Statistical Analysis

For a hypothesized intraclass correlation coefficient (ICC) of 0.80 between remote and F2F assessments,9) tested against a null value of 0.60 at an alpha value of 0.05, a minimum sample size of 50 participants was required to achieve a power of 0.80.
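The text does not state which method produced the sample size, so the following is only an illustrative sketch. It assumes the large-sample approximation of Walter, Eliasziw, and Donner for testing an ICC against a null value, with two ratings per participant (one F2F, one remote). The function name `icc_sample_size` and its parameters are hypothetical and are not taken from the study.

```python
import math
from statistics import NormalDist


def icc_sample_size(rho0, rho1, n_ratings=2, alpha=0.05, power=0.80, two_sided=True):
    """Approximate number of participants needed to test H0: ICC = rho0
    against H1: ICC = rho1 (assumed Walter-Eliasziw-Donner approximation)."""
    z = NormalDist()
    # Critical value for the chosen alpha (two-sided or one-sided test).
    z_alpha = z.inv_cdf(1 - alpha / 2 if two_sided else 1 - alpha)
    z_beta = z.inv_cdf(power)
    # Variance ratios implied by the null and alternative ICC values.
    theta0 = rho0 / (1 - rho0)
    theta1 = rho1 / (1 - rho1)
    c0 = (1 + n_ratings * theta0) / (1 + n_ratings * theta1)
    k = 1 + (2 * (z_alpha + z_beta) ** 2 * n_ratings) / (
        math.log(c0) ** 2 * (n_ratings - 1)
    )
    return math.ceil(k)


if __name__ == "__main__":
    print(icc_sample_size(0.60, 0.80))                   # two-sided alpha
    print(icc_sample_size(0.60, 0.80, two_sided=False))  # one-sided alpha
```

Under these assumptions, the approximation gives 49 participants for a two-sided test and 39 for a one-sided test, which is in the same range as the reported minimum of 50. The small difference may reflect a different formula, software, or rounding.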

We analyzed the reliability of the remote and F2F assessments based on ICC values (two-way mixed, absolute agreement).10) Values <0.5 indicated poor reliability; 0.5–0.75, moderate reliability; 0.75–0.9, good reliability; and >0.9, excellent reliability.10) Differences between F2F and remote cognitive scores were also examined using paired-samples t-tests. We then evaluated the agreement between the F2F and remote scores using Bland-Altman plots. These plots illustrate the agreement between F2F and remotely administered measures of each cognitive test by plotting, for each participant, the difference between the two scores (remote minus F2F) against their mean. The two horizontal dotted lines indicate the 95% limits of agreement, which were estimated as the mean difference ±1.96 times the standard deviation of the differences.
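As a minimal sketch of the reliability measure described above, the following computes a two-way, absolute-agreement ICC for paired F2F and remote scores from the usual ANOVA mean squares. The single-measures form is an assumption, as the text does not specify single versus average measures. The function names, the handling of values exactly at a cut-off, and the example scores are hypothetical.

```python
def icc_absolute_agreement(f2f, remote):
    """Two-way, absolute-agreement, single-measures ICC for two paired lists."""
    n, k = len(f2f), 2                      # n participants, k = 2 administrations
    rows = list(zip(f2f, remote))
    grand = (sum(f2f) + sum(remote)) / (n * k)
    row_means = [(a + b) / k for a, b in rows]
    col_means = [sum(f2f) / n, sum(remote) / n]

    ss_rows = k * sum((m - grand) ** 2 for m in row_means)   # between participants
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)   # between modalities
    ss_total = sum((x - grand) ** 2 for row in rows for x in row)
    ss_error = ss_total - ss_rows - ss_cols

    msr = ss_rows / (n - 1)
    msc = ss_cols / (k - 1)
    mse = ss_error / ((n - 1) * (k - 1))
    return (msr - mse) / (msr + (k - 1) * mse + k * (msc - mse) / n)


def classify_icc(icc):
    """Map an ICC to the reliability bands used in the text.
    Treatment of values exactly on a boundary is an assumption."""
    if icc < 0.5:
        return "poor"
    if icc < 0.75:
        return "moderate"
    if icc <= 0.9:
        return "good"
    return "excellent"


if __name__ == "__main__":
    f2f = [1, 2, 3]        # hypothetical scores
    remote = [2, 3, 4]     # same ranking, but one point higher throughout
    print(icc_absolute_agreement(f2f, remote))
    print(classify_icc(0.80))
```

In the hypothetical example, a constant one-point offset lowers the absolute-agreement ICC to about 0.67 even though the two sets of scores are perfectly correlated. This is why the absolute-agreement form suits a comparison of administration modes.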

The a priori-defined acceptable limits of agreement were ±1, ±2, and ±2 for AMT, mCMMSE, and CFAB, respectively. These limits were based on previous data demonstrating a minimal clinically important difference (MCID) of >1 for the AMT.11) For the MMSE, the reported MCID ranges from 1 to 3;12) thus, an average of 2 was used. Limited information exists on the MCID for the CFAB; therefore, a consensus was reached to define a score difference of ≥2 as having a significant effect on clinical outcomes.
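The agreement analysis can be sketched as follows: compute the bias and 95% limits of agreement from paired scores, then check whether the limits fall within the a priori acceptable range. The function names and the paired example scores are hypothetical. The acceptable limits, and the limits of agreement checked at the end, are the values reported in this article.

```python
from statistics import mean, stdev


def bland_altman(f2f, remote):
    """Return (bias, lower limit, upper limit) for remote-minus-F2F differences."""
    diffs = [r - f for f, r in zip(f2f, remote)]
    bias = mean(diffs)
    sd = stdev(diffs)                      # sample standard deviation
    return bias, bias - 1.96 * sd, bias + 1.96 * sd


def within_acceptable(lower, upper, tolerance):
    """True if both limits of agreement lie inside +/- tolerance."""
    return -tolerance <= lower and upper <= tolerance


# A priori acceptable limits stated in the text.
ACCEPTABLE = {"AMT": 1, "mCMMSE": 2, "CFAB": 2}
# 95% limits of agreement reported in the Results.
REPORTED = {"AMT": (-1.9, 2.6), "mCMMSE": (-4.3, 6.9), "CFAB": (-3.0, 3.8)}

exceeds = {
    test: not within_acceptable(lo, hi, ACCEPTABLE[test])
    for test, (lo, hi) in REPORTED.items()
}

if __name__ == "__main__":
    # Hypothetical paired scores, for illustration only.
    print(bland_altman([20, 22, 24], [21, 24, 24]))
    print(exceeds)
```

For all three tests, the reported limits of agreement fall outside the acceptable range, so `exceeds` is true for each.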

The data were analyzed using IBM SPSS Statistics for Windows, version 27.0 (IBM Corp., Armonk, NY, USA) and MedCalc for Windows, version 20.013 (MedCalc Software, Ostend, Belgium).

RESULTS

Of the 60 participants who consented to participate in this study, 56 (93.3%) completed both the F2F and remote assessments. Four participants were unable to complete the remote assessment because of a change of mind by the participant and family (n=2), a caregiver unable to commit to assisting the participant (n=1), or a dental condition (n=1). Thirty participants completed both the F2F and remotely administered CFAB. The mean±standard deviation interval between F2F and remote assessments was 17.7±3.2 days. Thirty-eight participants (68%) required assistance from their caregivers for the remote assessment.

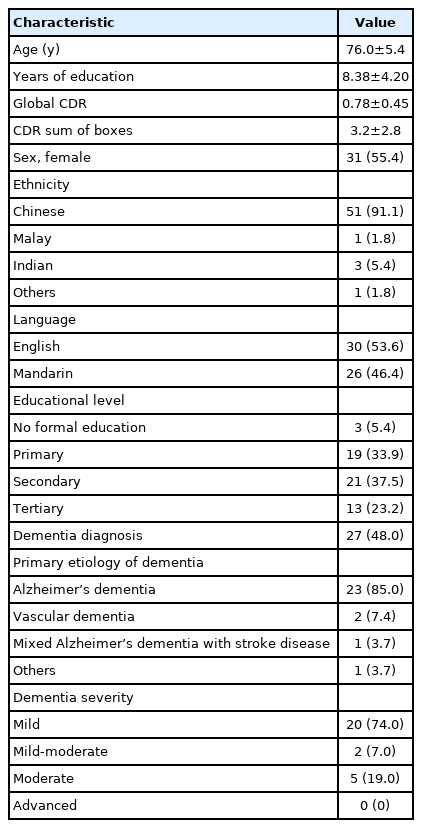

Table 2 shows the demographic and clinical characteristics of the study population. Most of the participants were female and of Chinese ethnicity. The mean education level was 8.38±4.2 years, corresponding to a secondary school level. Cognitive tests were conducted in English for 30 participants (53.6%) and Mandarin Chinese for 26 (46.4%). Almost half of the participants had a pre-existing diagnosis of dementia, with Alzheimer’s dementia (AD) the primary etiology in 21 participants (78%). Dementia was rated based on the CDR scale, with most cases of mild severity.

Table 3 shows the mean differences between F2F and remotely administered AMT, mCMMSE, and CFAB, with their respective ICC values. Participants scored higher during remote testing than during F2F for AMT and mCMMSE, with AMT significantly higher by 0.3±1.1 (p=0.029) and mCMMSE by 1.3±2.9 (p=0.001). No significant differences were observed between F2F and remotely administered CFAB mean scores.

All three assessments demonstrated good levels of reliability, with ICC values of 0.80 (95% confidence interval [CI] 0.68–0.88), 0.80 (95% CI 0.63–0.88), and 0.82 (95% CI 0.65–0.91) for AMT, mCMMSE, and CFAB, respectively.

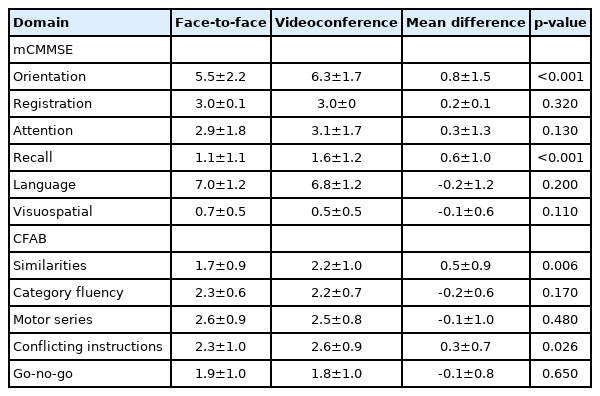

Table 4 shows the differences in the F2F versus remote mCMMSE and CFAB scores by domain. For mCMMSE, the participants scored 0.8±1.5 (p<0.001) and 0.6±1.0 points (p<0.001) higher during remote assessment in the orientation and recall domains, respectively. For CFAB, participants scored 0.5±0.9 points higher (p=0.006) for the similarities item and 0.3±0.7 points higher (p=0.026) for the conflicting instructions item.

Bland-Altman plots for AMT, mCMMSE, and CFAB are shown in Fig. 1A, 1B, and 1C, respectively. Almost all individual data points were within the 95% limits of agreement for all three cognitive tests. We observed evidence of systematic bias (remote minus F2F scores), with the overestimation of remote mCMMSE (bias=1.3, 95% limits of agreement -4.3 to 6.9) and AMT scores (bias=0.3, 95% limits of agreement -1.9 to 2.6), as shown in Table 3. The 95% limits of agreement were wide, ranging from -1.9 to 2.6 for AMT, -4.3 to 6.9 for mCMMSE, and -3.0 to 3.8 for CFAB, exceeding the a priori-defined levels of error. Notably, there were five outliers (score differences that exceeded the 95% limits of agreement) for AMT, three outliers for mCMMSE, and one outlier for CFAB. Compared with non-outliers, outliers were numerically older (78.9±4.1 vs. 75.5±5.5 years, p=0.60), had greater severity of cognitive impairment (CDR global scores 1.1±0.7 vs. 0.7±0.4, p=0.82; CDR sum of boxes scores 5.1±3.3 vs. 2.8±2.6, p=0.71), and had lower educational levels (7.3±5.9 vs. 8.6±3.8 years, p=0.35), although none of these differences reached statistical significance.

DISCUSSION

The present study adds to the growing body of evidence examining the validity of telemedicine for cognitive assessment in older adults. To our knowledge, this is the first study evaluating remote CFAB assessment. Specifically, remote videoconferencing-based administration of AMT, mCMMSE, and CFAB showed good reliability but only fair agreement with the F2F assessment. A small but significant bias was observed for AMT and mCMMSE between both assessment modalities, with remote scores higher than those of the F2F-based assessment. We also found wide limits of agreement for all three cognitive tests, exceeding our predefined limits for maximum acceptable differences. These were, in part, driven by outliers with extreme differences, particularly for the mCMMSE. When analyzed by cognitive domains, participants demonstrated higher scores via remote testing in the orientation and recall items of the mCMMSE and the similarities and conflicting instructions items of the CFAB.

Our findings demonstrating good reliability between F2F and remote cognitive testing are consistent with those of prior telehealth studies. Remote MMSE showed an excellent ICC (0.905)9) and a high correlation (r=0.90) with F2F administration.13) However, Loh et al.13) also found wide 95% limits of agreement, ranging from -3.9 to 4.5, a finding consistent with ours. Furthermore, we observed higher AMT and mCMMSE scores with remote than with F2F administration. Possible explanations for this discrepancy include practice effects, which cannot be eliminated entirely. To mitigate these effects, we chose an interval of 2–3 weeks between F2F and remote assessments, as reported previously.14) This interval sought to balance the risk of practice effects if the second visit was scheduled too close to the first against the risk of longitudinal changes in test scores if repeat tests were spaced too far apart.14,15) In support of this time interval, a previous study demonstrated the stability of the MMSE for up to 6 weeks.16) However, future studies may counterbalance the order of F2F and remote assessments to minimize practice effects.

The higher scores observed during the remote assessment may also be attributed to the cues or prompts provided by the caregivers in our study. To preempt this, we conducted briefings before the remote assessments to ensure a quiet and distraction-free environment for videoconferencing. Nonetheless, for individuals with the largest discrepancies between the remote and F2F assessments, we observed that caregivers frequently prompted participants outside the camera field of view. In addition, the presence of environmental cues (clocks and calendars) may be another plausible reason for the higher remote testing scores, as reflected in the significantly better performance in the orientation domain when administered remotely. These findings underscore the need for an optimal environment for valid telehealth assessment.

The results of our study highlight the importance of employing various measures of reliability and agreement for the comprehensive evaluation of validity. While many studies have reported good correlations between remote and F2F assessments, high correlations are not synonymous with good agreement and may fail to detect systematic bias, as observed in our study. Moreover, interpreting the ICC remains challenging because its value is largely determined by the heterogeneity of the sample: when between-subject variance is high, the ICC is likely to be high, and vice versa.17) In our study, the wide range of mCMMSE scores may in part explain the high ICC estimates but does not necessarily reflect agreement between remote and F2F assessments. In contrast, the Bland-Altman plots provided a visual assessment of bias and agreement, enabling the analysis of individual data points and the identification of outliers with large degrees of disagreement. Identifying outliers also allowed for further analysis to elucidate the reasons for the large discrepancies between remote and F2F assessments.
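The distinction between correlation and agreement can be illustrated with a small sketch using hypothetical scores in which every remote score is exactly three points higher than the F2F score. The data and the helper name `pearson_r` are illustrative only and do not come from the study.

```python
from math import sqrt
from statistics import mean


def pearson_r(x, y):
    """Pearson correlation coefficient for two equal-length lists."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


# Hypothetical scores: remote is always 3 points higher than F2F.
f2f_scores = [10, 14, 18, 22, 26]
remote_scores = [score + 3 for score in f2f_scores]

correlation = pearson_r(f2f_scores, remote_scores)
bias = mean(r - f for f, r in zip(f2f_scores, remote_scores))

if __name__ == "__main__":
    print(f"Pearson r = {correlation:.2f}")          # perfect correlation
    print(f"Bias (remote minus F2F) = {bias}")       # systematic overestimation
```

Here the correlation is perfect, yet every remote score overestimates the F2F score by three points. A correlation coefficient alone would miss this bias, whereas the mean difference in a Bland-Altman analysis exposes it.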

The present study evaluated the CFAB adapted for telemedicine, which incorporates motor tasks, including finger tapping and copying a series of hand movements. While the 95% limits of agreement for CFAB exceeded the a priori defined levels, our results still indicated that cognitive tests with motor components might be feasibly completed in a telehealth setting. To adapt the CFAB for remote administration, we omitted the final “environmental autonomy” item, which involved placing the examiner’s hands out and instructing the patient not to touch them, and observing for abnormal behavior such as imitation, utilization, and prehension behavior. Omitting this item is unlikely to significantly affect the validity of the CFAB, as demonstrated in a study revealing its limited utility in early cognitive impairment due to a ceiling effect present from normal to early dementia.8)

The strengths of this study include its use of various measures of reliability and agreement in a single study to evaluate validity, with a sample size adequately powered for the primary objective. We also used the same raters for both assessments of each participant and standardized the testing procedures before commencing the study to minimize variability. We did not require the use of additional equipment beyond the participants’ smartphones. However, the limitations of our study include the lack of data on hearing and visual impairments and their impact on our results. Factors such as mood or behavior that may have influenced remote cognitive testing were not assessed in this study. Furthermore, our results are not generalizable to older adults from community-based populations with normal cognition or to those at moderate to advanced stages of dementia. Moreover, as our sample included only individuals with access to smartphone devices, stable network connectivity, or caregivers who were available to assist (38 of the 56 participants needed caregivers), our results cannot be generalized to older adults across the spectrum of socioeconomic status and familiarity with technology.

Our study adds to the body of evidence evaluating the validity of telemedicine-based cognitive assessment, particularly in older adults with cognitive impairment. We also provide results from a “real-world” implementation of telemedicine in a clinical setting during the COVID-19 pandemic. Given the potential clinical and medicolegal ramifications of cognitive testing results, our results suggest that providers should cautiously adopt telemedicine-based cognitive assessments, with careful attention paid to ensure a conducive environment in which remote testing can occur. Nevertheless, during a pandemic that has disproportionately affected older adults, telemedicine serves an important need to maintain continuity of care in settings that face disruption of essential medical services. Further studies are needed to establish the validity of telemedicine for dementia diagnosis and treatment in a larger sample, evaluate the acceptance of telehealth in older adults, and increase access to telehealth services.

Acknowledgements

We acknowledge the patients of the TTSH GRM Memory Clinic who participated in our study and express our gratitude to the GRM Memory Clinic doctors (Drs. Chew Aik Phon, Khin Win, Esther Ho, Koh Zi Ying, Eloisa Marasigan, and See Su Chen) and nurses who helped in seeing our participants.

Notes

CONFLICT OF INTEREST

The researchers claim no conflicts of interest.

FUNDING

None.

AUTHOR CONTRIBUTION

Conceptualization, JC; Data curation, HHCH, PLO, PA, SLA, NBMS, PYSY, NBA, JPL, LWS, JC; Investigation, PLO, PA, SLA, NBMS; Methodology, JC; Project Administration, PYSY; Supervision, JC; Writing-original draft, HHCH; Writing-review & editing, HHCH, JPL, NBA, LWS, JC.